This blog was written by Marcus Catt, a principal consultant for Infuse with over 40 years’ worldwide experience working within the IT sector.

Why do we Automate User Interface Testing?

An Introduction

I have to make an admission straight away. This is not an in-depth look at tools or a comparison of their capabilities, or a detailed theoretical analysis of the various phases of software development paradigms. Nor is it restricted to discussing UI testing.

It is very much a pragmatic, experience-based set of observations on why some basic theory doesn’t appear to work well in everyday software development and a little bit of a dive into what I think you need a UI automation toolset to give you – not necessarily what the vendors think you might need.

And all this from somebody who has been in the industry over 40 years and has spent nearly 30 of those years working in and around automated UI test tooling. So it’s an informed opinion, right? Who knows. It may resonate, it may not.

And I warn the reader now – we are going to go on a bit of a circular journey. I am going to suggest at some point that we should not be doing automated UI testing. I am going to explain what I want from the tools and also why we actually do UI automation testing and just perhaps how we could approach it to get the best available outcome.

In the beginning there was WinRunner.

Well, we might as well start with a lie. It wasn’t the first by a long way. But it was the first that could handle multiple technologies, was easily extendable, interfaced through standard models to management and reporting tools, had a sensible approach to object management and most importantly it worked! Some think (and with fair reason) that it remains the best solution to this day.

25 years later……what do we have now?

WinRunner has long since been superseded and there are now too many functional UI test tools to count, let alone list or describe. There are free tools, partially free (function limitations then pay for the good stuff) and full proprietary licensed tools. You can locally install, run in the cloud, share a platform etc etc etc. Oh, the choice!

Oh, and I forgot to mention “AI”. How silly of me. Many of them have AI engines/capabilities to help with the construction, maintenance and execution models. Just remember please that most of the time “it” ain’t artificial and “it” ain’t intelligent!

The feature list is simply awesome. How do you decide which one to get, and how much your run costs will be?

Software Testing, what is important for you?

Very quickly, I am going to dive off into another topic – why do we test stuff anyway (wow, nothing like a good segue, is there?). After all, that just slows down developers and gets in the way of delivering cool stuff, right?

Assertion: We test stuff because in reality we know the software is absolute garbage and doesn’t actually work (that is, do everything we specified and everything we wished we had specified but forgot to) and we want to find some basis for deciding it’s OK to accept the identified RISKS, make informed guesses about the ones we haven’t identified yet and let other people use it for the intended purpose. Yes, get over it. The code written by the developer does not work – if you are lucky it will do what the developer thought it should do – that’s not a good definition of working from my perspective.

Side note – We are exclusively discussing functionality here. Non-functional aspects are far more significant and yet receive little attention until it is too late. Another topic I think.

If you think what I have asserted is way off the mark then you should probably stop now and not read any more. It’s just not going to work for you.

If we accept that we are trying to expose our risks (early is better than later, right?) then spending a lot of time, effort and money on UI testing does appear at first sight to be a little bit counter-intuitive, doesn’t it? UI testing often happens very late in the project release cycle. This means you are carrying a lot of risk into the latter stages. That’s just not cool, is it?

Well, (as those of us who have taken advanced driving courses tend to say) it depends.

A few pseudo-random questions first:

- Where in your development lifecycle do you run the UI tests – is it near the end?

- Do you need a lot of components to be “done” and configured in order to get value?

- Does it take a long time to run the tests because there are a lot of them?

- Do you use a range of UI/Display models (web browsers/OS variants/Mobile devices)? Let’s give a load of money to Browserstack shall we?

- Do you get any first run failures that require repeat runs just to run and pass?

- Do you compromise what you run on each occasion because it’s hard? Maybe you run some of them manually?

- Do you really have any idea what your actual code/requirements/usage path coverage is (don’t lie please – and I mean objective measure)?

I thought so!

For a very long time I have felt that by automating the UI testing we are at best using an Elastoplast (other bandages are available) or approaching our quality model from the wrong perspective.

Normally (yes, I accept not always – get over it), I find the decision to automate the UI tests is taken when it is realised the 5 manual E2E tests we wrote on Christmas Eve to support a last-minute emergency go live have now become 3482 tests across 8 browsers and take 16 man-days to run. And nobody is volunteering to run them except that very good and very cheap offshore consultancy you have found on the internet from Denpasar. You have somehow missed completely that the REAL (immediate) problem with them is that some of them FAIL, so you have carried all the RISK to the end and it’s gone bad and you still can’t release your software because now your QA model says ALL the tests have to pass and nobody will sign off the release. Life was so much easier when we did not test software, wasn’t it?

Now it’s broken you need to fix it – Damn!

Could we have written a unit test to cover the same ground of the failing UI test? I will leave that one with you for another time.

Planned UI testing

Let’s take a small leap and assume Automated UI testing is useful and can be done systematically and well. What is it good for and what features do we want from our toolset?

I am going to list some attributes I think any UI test tooling should have as part of its DNA to be considered useful for a planned UI testing activity (as opposed to a discovered need to do the wrong thing, but quicker and cheaper). This is very much a personal view based on doing it.

- They should just bloody work – sounds obvious I know, but strangely some of them still don’t and the vendors can get very uptight about it.

- Interface through standard APIs to Test Scheduling tooling (CI/CD – Jenkins/TeamCity/CodeBuild), Test Management (Jira/MS Test Manager etc) and Reporting tools (Jira/Grafana etc). This enables scheduling, dashboard reporting, coverage, defect generation and notification models.

- Be extendable – I need to trigger something external by time or event – should not be too challenging? I need a plugin template.

- Not have secrets in the construction/object modelling – secrets leave me struggling to diagnose why it doesn’t work. Standard models only please – json/xml etc.

- Image accuracy checking – we have variable display heads with the same picture in different resolutions – should not be too hard? And each new screen should be a picture taken as well.

- Data generation randomisers – Why do I need to create/generate all the data?

- Standard tooling Data loaders and cleaners – If I do decide to generate the data because there is a lot of it and it needs to be really specific – I don’t want to use the UI to do it – it’s slow and I want to clean up afterwards

- Component mocking and replacements models – why do I need a chain of 15 components in the environment just to return an Ajax payload – why can’t I intercept the call and mock the right answer so I can just check the UI flow. If I can do that, I can check the UI much earlier in the cycle and lower my risk right?

- Configuration recording, I need to know exactly what I tested as well as when

- Traffic logging – it’s all HTTP/XML/TCP anyway, so why do I have to grab it elsewhere in other logging toolsets

- Manage flaky tests and make object model updates easy – this bit to be fair most of them have mastered

- Produce Awesome reports focussed on the User wants and needs – not what the tools would like us to want

- Application Test Case Identification and auto generation – this would be the biggest step forwards and really could be described as AI

These are all things I have had need of, and had to develop many times in order to get significant value from my UI testing. The most significant of them is the last one – auto generation of test cases. And as far as I know it’s a missing item from all UI test tools

Some of what I have listed is included in the latest and best of today’s test tooling – but not all by any means
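To make the component mocking item in the list above a little more concrete, here is a minimal sketch in Python using only the standard library. The `/api/orders` endpoint, the payload and every function name are assumptions made up for illustration, not taken from any real system or tool. A small stub server answers the Ajax-style call in place of the full component chain, so the code that drives the UI flow can be checked without the real environment. Tools that offer this usually intercept the call inside the browser instead, which this sketch does not attempt.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical canned payload that the real back-end chain would have produced.
CANNED_ORDERS = {"orders": [{"id": 1, "status": "SHIPPED"}]}


class StubBackend(BaseHTTPRequestHandler):
    """Answers the UI's Ajax call directly, in place of the real component chain."""

    def do_GET(self):
        if self.path == "/api/orders":  # hypothetical endpoint
            body = json.dumps(CANNED_ORDERS).encode("utf-8")
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
        else:
            self.send_error(404)

    def log_message(self, format, *args):
        pass  # keep the test output quiet


def start_stub():
    """Start the stub on a free local port and return (server, base_url)."""
    server = HTTPServer(("127.0.0.1", 0), StubBackend)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server, f"http://127.0.0.1:{server.server_port}"


def fetch_order_statuses(base_url):
    """Stand-in for the front-end call whose result drives the UI flow."""
    # An empty ProxyHandler makes sure the local call is not sent via a proxy.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    with opener.open(f"{base_url}/api/orders", timeout=5) as response:
        payload = json.load(response)
    return [order["status"] for order in payload["orders"]]


server, base_url = start_stub()
try:
    statuses = fetch_order_statuses(base_url)
finally:
    server.shutdown()
    server.server_close()
```

Because the stub owns the answer, the same check can run long before the other components are “done” and configured, which is where the earlier exposure of risk comes from.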

If I can run UI automation and if it passes, I can release my software with safety and certainty, right? Well, that depends doesn’t it.

Have I wandered off track? Why are we doing this? I have forgotten now

Any test is better than no test – but how much of your software have you tested? Is there a better way? Maybe one that identifies defects earlier and carries less RISK to latter parts of our delivery model? I think I muttered a relevant question on this earlier.

The key problem with most UI/journey-based automation approaches in my opinion is that they carry all the RISK to the end of the release cycle, are the most complex of tests, and sometimes many of them are not remotely synchronous. Even the async parts are just too async to be automated reliably.

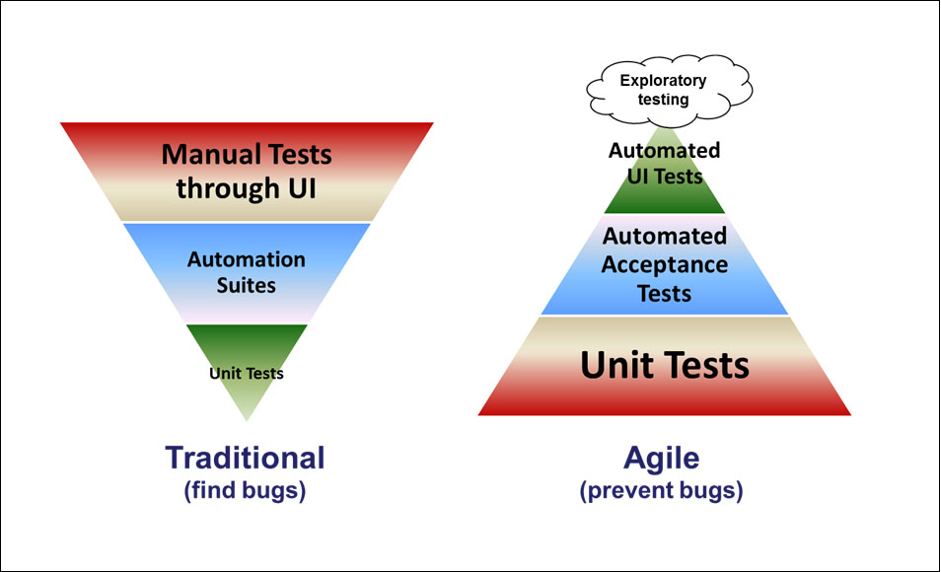

There is another way. Something many of you will have heard of (maybe some of you are or have been, like me, an evangelist for this approach). It’s known as “The Testing Pyramid”.

A better approach – The Pyramid

Here are three links that explain it much better than I can (worth reading):

- http://www.agilenutshell.com/episodes/41-testing-pyramid

- https://martinfowler.com/articles/practical-test-pyramid.html

- https://automationpanda.com/2018/08/01/the-testing-pyramid/

Everything about it makes sense – why isn’t everyone doing it? I mean actually doing it!

It ensures the code is built on solid foundations. It shifts everything it can to the left, so surfaces RISK early. It provides coverage models. It reduces RISK to an absolute minimum. Statements of progress are real, not forward predicted imaginary guesswork. On paper, it seems obvious that we should all be doing this and we won’t need automation UI test tools for thousands of tests. Just solid Engineering practice. Should not be too hard, should it? What could possibly go wrong?

I am going to make another assertion, get ready.

Assertion: The Testing pyramid does not work in practice for at least two significant reasons

1. It requires discipline and strength of character that is just not sufficiently present or appreciated appropriately by delivery management streams. So we fail to apply the corrective (improvement) actions when bad practice and failure are identified.

2. There are not a sufficient number of software engineers available to YOU that will execute code creation of sufficient design quality that will allow you to write the quantity and quality of automated unit and integration tests that form the foundation of this approach.

The Test Pyramid makes perfect sense to me. It will enable rapid delivery of change with certainty and confidence. But you MUST correct your mistakes NOW. Not next week, maybe, never……. they must be addressed NOW. You have to re-write poor code ASAP. That just isn’t popular. Project/Delivery manager standard question: Why do we need this work done? We will accept the RISK, write a ticket and put it on the backlog etc. etc. And so it decays and dies before it’s ever up and running.

Please note I am not separating the resources into developer and test etc. I don’t care, they are people with skills – call them what you like, deploy them however you want

An Example (this is true and real life – but I will not tell you where – to be completely fair, I have too many of these now):

Some time ago in a land far far away, a developer contacted me saying he was reviewing the change submission for a release to live and there was new code in one of the components proposed and merged etc. But he could not find any acceptance tests in the submission for the proposed change. The software stack had various phases of unit/integration/contract and acceptance tests – all automated and allowed for manual as well. But he could find no new test evidence for the change.

Developer asking for help – so I started looking at the code in a screen share with the developer.

Sure enough, no Acceptance Test submission on this topic anywhere. So, we looked at the integration and unit tests. Nothing!

We started to look at the component Source code (I was not familiar with it at all). After a few minutes I started to laugh. My colleague was very intrigued and wondered why?

I (between giggles) asked if the developer had looked closely at the code. On the face of it, it was well formatted and appeared to have reasonable structure and decomposition. But once you got past “cursory” it became obvious that this code was untestable and some of it (currently) not executable. And looking at the existing Unit Tests showed this in stark detail: whoever had written them had clearly struggled and given up.

Side note on Unit testing – Unit testing metrics tell you what you have not tested. Not quite so good at telling you what you have tested well. Again, another topic to explore. And if you get somebody really good at configuring the metric tool? They tell you nothing!
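A small sketch of the side note above, with every name hypothetical and invented for illustration. Both checks below execute every line of `apply_discount`, so a line-coverage tool would report the same 100% for each, yet only one of them can ever notice the deliberate bug.

```python
def apply_discount(price, percent):
    """Hypothetical function with a deliberate bug: it adds the discount."""
    return price + price * percent / 100


def check_executes_every_line():
    # Runs every line of apply_discount, so line coverage reads 100%,
    # but it asserts nothing and therefore can never fail.
    apply_discount(200, 10)


def check_asserts_the_result():
    # Identical coverage figure, but this one verifies the behaviour.
    assert apply_discount(200, 10) == 180


def passes(check):
    """Tiny stand-in for a test runner: True if no assertion fails."""
    try:
        check()
        return True
    except AssertionError:
        return False


weak_result = passes(check_executes_every_line)
strong_result = passes(check_asserts_the_result)
```

The coverage number is the same in both cases; what differs is whether anything was actually checked, which is the part the metric does not tell you.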

The example above was a seminal moment for me. I knew these guys, they were good Software Engineers and yet had produced something that “worked” but was really poor engineering. I remembered all the other times I had done a deeper dive and got a very similar result. It seemed every time I looked, I found poor code work. The lightning bolt moment was realising that probably most of the codebase in most work projects I looked at was sub-standard and always would be.

And with it, the assumption that you can reduce the work done in latter stages of testing because of the quality, coverage and risk reduction work done at code/compile time was destroyed forever in my mind. I will probably never again seriously suggest its adoption.

Test Pyramid paradigm not available for use? The Impact is?

There is a gap between the time available for any given piece of work and the time needed by the individual to complete the work to the required standard, so there are forever compromises made. The Software Engineer may think the people they are working with understand those compromises and the consequences. Or at least the people who call the shots. Over time it becomes obvious that either they were not understood, or alternatively people were not aware of the compromise at all. Repeat forever everywhere.

All this despite the availability of modern delivery approaches designed to avoid the problems inherently caused by:

- people like to say yes when they mean no.

- people don’t work well under significant pressure. And they start to tell little white lies – mostly through omission

- The maintenance cost (sometimes mistakenly referred to as technical debt) always kills future delivery schedules

- Risk turns up late to the party just as we have told the project board we are 95% done (what does that even mean?)

The main impact of a failed test pyramid is that delivery schedules get elongated and the business lose faith in the IT department and consider outsourcing (amongst many other solutions) because at least they get to understand what the industry thinks of their risk quotient in raw cash terms.

The explicit “fail” is often identified either just before or after go live. Next a recovery or response initiative is constructed. This is nearly always people and process heavy to begin with and creates “assets”

These assets largely exist because of failings at an earlier phase, but that root cause is very often not addressed either through ignorance or the magnitude of the task. We accept the RISK again!

And in that way, the sticking plaster often becomes part of the overall solution moving forwards and requires managing, maintaining and improving in its own right.

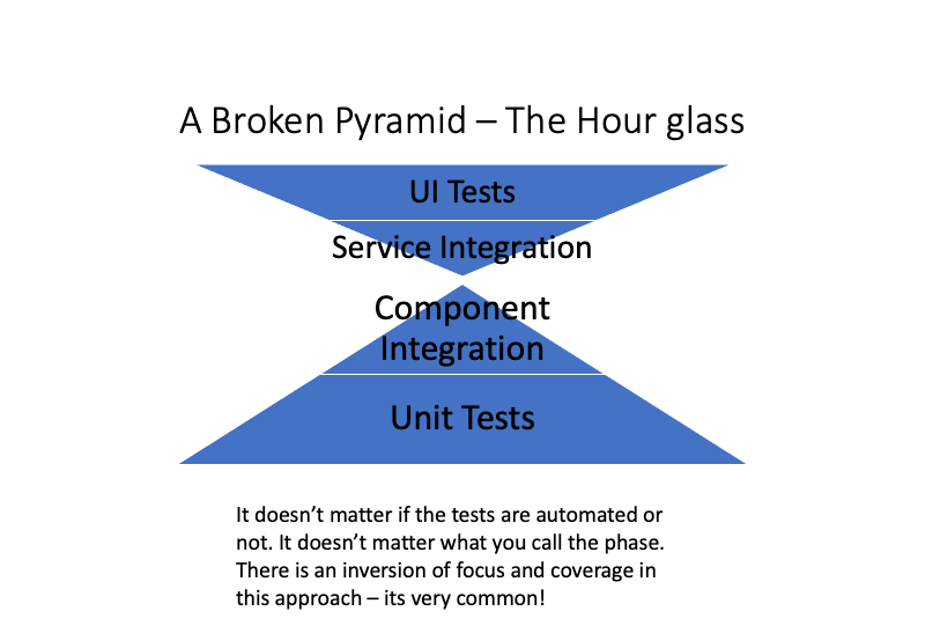

Enter please, The UI Test library! And so we are back at the beginning of this little romp.

The hourglass approach……And back to focussed UI testing (preferably automated)

Let us assume that the individual components required to form “your” system have been “released” based on some form of unit test/integration test/acceptance test sequence and you now want to make sure they work at a “system” level in a configured environment with appropriate data sets to execute either system acceptance testing and/or user acceptance testing through the primary interface available to users – the UI!

We can script and execute all the tests using manual labour. And/or pick and mix as we choose. If the UI is extraordinarily dynamic and evolving this may yet be an optimal approach.

We can in most cases choose to select some or all of our test cases as candidates for automation.

This does not reduce the RISK we are carrying. It does not remove the rework time when you find a defect. It’s always good to assume you will repeat this cycle a couple of times and not plan for a single pass through the stage.

Automating the UI test cases does, however, give you the ability to accelerate and be significantly more deterministic about your execution times, and your test runs will always be executed exactly as it says in the script. And that is definitely worth having if you cannot adopt a purer model.

In conclusion

I have previously suggested some attributes I think the UI tools should have. I have also suggested that automated UI testing is perhaps an anti-pattern for good software development. I am going to finish by observing that in today’s world of “rapid” (perhaps over optimistic achievement statements?) development it is an essential part of any pragmatic go live plan, and suggest a few essential steps/considerations (outside of the tool selection itself) to make it a success.

- Treat this approach as a software development project in its own right with appropriate standards

- Consider long and hard what your use cases (for this tooling) are and choose wisely

- Build simple UI test models first and add all the main interfaces to work out the integration overheads

- Reporting, Reporting, Reporting – Everyone needs to know and everyone needs to know something different

- Work out where your maintenance headache will come from and make sure your tool choice helps mitigate it. Maintenance of test assets is a huge cost burden.

- Beware proprietary and opaque tooling

- Configuration is king. A 100% passing test run with one component configured incorrectly, or at an incorrect version, renders the results valueless

- Pay attention to your data set up and destruction. Please avoid using UI functionality to create data to enable the testing of other UI functionality. Despite being fairly obvious you would be amazed how many times I still see it done. And I only ever find out when it goes wrong and you cannot test anything.

- Take snapshot pictures on every screen “submit” so you can see what the last thing typed was.
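Two of the points above, data set up and destruction outside the UI and recording the configuration, lend themselves to a short sketch. This is one possible shape in Python with the standard library only, not a recommendation of any tool. The table, the seed rows, the component names and the version numbers are all hypothetical, and an in-memory SQLite database stands in for whatever data store your system really uses.

```python
import json
import platform
import sqlite3
from contextlib import contextmanager

# Hypothetical seed rows; a real suite would load these from a versioned file.
SEED_CUSTOMERS = [(1, "Ada"), (2, "Grace")]


@contextmanager
def seeded_customers(connection):
    """Load test data straight into the store, then remove it afterwards."""
    connection.executemany("INSERT INTO customers VALUES (?, ?)", SEED_CUSTOMERS)
    connection.commit()
    try:
        yield connection
    finally:
        # Clean up even if the test fails, so the next run starts from a known state.
        connection.execute("DELETE FROM customers")
        connection.commit()


def run_manifest(component_versions):
    """Record exactly what was under test, to be stored next to the results."""
    return {
        "python": platform.python_version(),
        "components": dict(sorted(component_versions.items())),
    }


connection = sqlite3.connect(":memory:")
connection.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")

with seeded_customers(connection) as db:
    # The UI test would run here against data it did not have to create itself.
    customer_count = db.execute("SELECT COUNT(*) FROM customers").fetchone()[0]

rows_left = connection.execute("SELECT COUNT(*) FROM customers").fetchone()[0]

# Hypothetical component names and versions.
manifest = run_manifest({"web-ui": "2.4.1", "orders-api": "1.9.0"})
manifest_json = json.dumps(manifest, indent=2)
connection.close()
```

Storing `manifest_json` alongside each run's results is one way to be able to say afterwards exactly what was tested, as well as when.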

Good luck and stay safe.

Some interesting further reading for you all to enjoy – well I enjoyed reading them anyway.

- https://medium.com/@dreweaster/technical-debt-recognising-the-rate-of-interest-26ebe2d0f371

- https://defragdev.com/blog/2009/03/30/code-coverage-what-it-is-and-what-it-isnt.html

- https://medium.com/@mateuszroth/why-the-test-pyramid-is-a-bullshit-guide-to-testing-towards-modern-frontend-and-backend-apps-4246e89b87bd

- https://www.tricentis.com/blog/eroding-agile-test-pyramid